Redundant Array of Inexpensive Disks (RAID)

In 1987, Patterson, Gibson, and Katz at the University of California at Berkeley published a paper entitled “A Case for Redundant Arrays of Inexpensive Disks (RAID).”

RAID is a category of disk storage that combines two or more drives for fault tolerance and performance and presents them to the operating system as a single logical disk.

RAID disk drives are used frequently on servers but aren't generally necessary for personal computers.

Striped Disks

Increase I/O performance

Split I/O transfers across the component physical devices

Perform I/O in parallel, balancing the I/O load across disks

Create very large filesystems spanning more than one physical disk
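As a rough illustration of how striping splits I/O across the member disks, the sketch below maps a logical block number to a disk and an offset on that disk for a plain RAID 0 layout. It assumes round-robin placement of fixed-size stripe units (`stripe_unit` blocks go to one disk before moving to the next); the function name `locate_block` is hypothetical, and real volume managers add their own metadata and layout variations.

```python
from __future__ import annotations


def locate_block(logical_block: int, num_disks: int, stripe_unit: int) -> tuple[int, int]:
    """Map a logical block to (disk index, block offset on that disk).

    Assumes a simple round-robin RAID 0 layout: stripe_unit consecutive
    blocks go to one disk, the next stripe_unit blocks to the next disk, etc.
    """
    if logical_block < 0 or num_disks < 1 or stripe_unit < 1:
        raise ValueError("invalid arguments")
    unit_index, within_unit = divmod(logical_block, stripe_unit)
    disk = unit_index % num_disks    # round-robin across the disks
    row = unit_index // num_disks    # full stripes that precede this unit
    return disk, row * stripe_unit + within_unit


if __name__ == "__main__":
    # Ten consecutive blocks on 4 disks with 2-block stripe units:
    # blocks 0-1 land on disk 0, 2-3 on disk 1, ..., 8-9 wrap back to disk 0.
    for block in range(10):
        print(block, locate_block(block, num_disks=4, stripe_unit=2))
```

Because consecutive stripe units land on different disks, a large sequential transfer is serviced by several drives at once, which is where the parallelism comes from.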

General considerations for striped disk configurations:

· The individual disks in a striped filesystem should be on separate disk controllers.

· The individual disks must be identical devices: same size, same layout, and even the same model. Otherwise, often only the capacity of the smallest disk is used on each member of the filesystem.

· In no case should the device containing the root filesystem be used for disk striping.

· The stripe size is important.

o For large enough I/O operations, a large stripe size results in better I/O performance.

o A large stripe size can make less efficient use of the available disk space.
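The stripe-size trade-off comes down to how many disks a single request touches. The sketch below is a hypothetical helper, assuming the same round-robin RAID 0 layout with all sizes in KB, that counts the distinct disks spanned by one contiguous request.

```python
def disks_touched(offset_kb: int, size_kb: int, stripe_kb: int, num_disks: int) -> int:
    """Count distinct disks touched by one contiguous request in a RAID 0 set.

    Assumes consecutive stripe units are placed round-robin on the disks,
    so n consecutive units cover min(n, num_disks) different disks.
    """
    if size_kb <= 0:
        return 0
    first_unit = offset_kb // stripe_kb
    last_unit = (offset_kb + size_kb - 1) // stripe_kb
    return min(last_unit - first_unit + 1, num_disks)


if __name__ == "__main__":
    # A 256 KB request starting at offset 0 on a 4-disk stripe set.
    for stripe in (16, 64, 256):
        print(f"stripe {stripe:>3} KB -> {disks_touched(0, 256, stripe, 4)} disk(s)")
```

With a small stripe, one request keeps every disk busy; with a stripe as large as the request, the whole request is a single contiguous transfer on one disk, leaving the other disks free for concurrent requests. Which works better depends on the workload mix, so the choice is worth checking against the actual I/O pattern.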

The different RAID levels

· Level 0 -- Striped Disk Array without Fault Tolerance: Provides data striping (spreading out blocks of each file across multiple disk drives) but no redundancy. This improves performance but does not deliver fault tolerance. If one drive fails, all data in the array is lost.

· Level 1 -- Mirroring: Provides disk mirroring. Level 1 provides twice the read transaction rate of single disks and the same write transaction rate as single disks.

· Level 2 -- Error-Correcting Coding: Uses Hamming error correction codes and is intended for use with drives that do not have built-in error detection. Rarely used and not a typical implementation, Level 2 stripes data at the bit level rather than the block level.

· Level 3 -- Bit-Interleaved Parity: Provides byte-level striping with a dedicated parity disk. Level 3, which cannot service multiple requests simultaneously, is also rarely used.

· Level 4 -- Dedicated Parity Drive: A commonly used implementation of RAID, Level 4 provides block-level striping (like Level 0) with a parity disk. If a data disk fails, the parity data is used to rebuild its contents onto a replacement disk. A disadvantage of Level 4 is that the parity disk can become a write bottleneck, because every write must also update it.

· Level 5 -- Block Interleaved Distributed Parity: Provides data striping at the block level and also stripes the parity (error correction) information across all drives. This results in excellent performance and good fault tolerance. Level 5 is one of the most popular implementations of RAID. At least 3 drives are required for RAID 5 arrays.

· Level 6 -- Independent Data Disks with Double Parity: Provides block-level striping with two independent sets of parity data distributed across all disks, so the array can survive two simultaneous drive failures.

· Level 0+1 – A Mirror of Stripes: Not one of the original RAID levels. Two RAID 0 stripes are created, and a RAID 1 mirror is created over them. Used for both replicating and sharing data among disks.

· Level 10 – A Stripe of Mirrors: Not one of the original RAID levels. Multiple RAID 1 mirrors are created, and a RAID 0 stripe is created over these.

· Level 7: A trademark of Storage Computer Corporation that adds caching to Levels 3 or 4.

· RAID S: EMC Corporation's proprietary striped parity RAID system used in its Symmetrix storage systems.
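The parity-based levels (3, 4 and 5) rely on XOR: the parity block is the XOR of the data blocks in a stripe, so any single missing block equals the XOR of everything that survives. The sketch below shows that, one simple RAID 5 parity rotation, and a usable-capacity estimate per level that treats every member as the size of the smallest disk, as noted for striped sets above. The helper names, the rotation formula, and the capacity table are illustrative assumptions, not any particular controller's behavior; real RAID 5 layouts and minimum disk counts vary between implementations.

```python
from __future__ import annotations

from functools import reduce
from operator import xor


def parity(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (the parity used by RAID 3/4/5)."""
    if not blocks or len({len(b) for b in blocks}) != 1:
        raise ValueError("need one or more blocks of equal length")
    return bytes(reduce(xor, column) for column in zip(*blocks))


def reconstruct(surviving: list[bytes], parity_block: bytes) -> bytes:
    """Rebuild one lost data block: XOR of the surviving blocks and the parity."""
    return parity(surviving + [parity_block])


def raid5_parity_disk(stripe: int, num_disks: int) -> int:
    """Disk that holds parity for a stripe under one simple rotation
    (an assumption; actual RAID 5 layouts differ between implementations)."""
    return (num_disks - 1 - stripe) % num_disks


# level -> (minimum disks, usable disks for n members, failures always survived)
LEVELS = {
    "0": (2, lambda n: n, lambda n: 0),
    "1": (2, lambda n: 1, lambda n: n - 1),    # n-way mirror
    "4": (3, lambda n: n - 1, lambda n: 1),
    "5": (3, lambda n: n - 1, lambda n: 1),
    "6": (4, lambda n: n - 2, lambda n: 2),
    "10": (4, lambda n: n // 2, lambda n: 1),  # more if failures hit different pairs
}


def usable_capacity(level: str, disk_sizes_gb: list[int]) -> tuple[int, int]:
    """Return (usable GB, guaranteed failures survived); each member counts
    as the size of the smallest disk."""
    min_disks, usable, survives = LEVELS[level]
    n = len(disk_sizes_gb)
    if n < min_disks or (level == "10" and n % 2):
        raise ValueError(f"too few (or, for RAID 10, an odd number of) disks for RAID {level}")
    return usable(n) * min(disk_sizes_gb), survives(n)


if __name__ == "__main__":
    stripe = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
    p = parity(stripe)
    # Lose the middle block, then rebuild it from the other two plus parity.
    assert reconstruct([stripe[0], stripe[2]], p) == stripe[1]
    print("parity disk per stripe:", [raid5_parity_disk(s, 4) for s in range(5)])
    print("RAID 5 on 100/100/80 GB:", usable_capacity("5", [100, 100, 80]))
```

With three disks of 100, 100 and 80 GB, RAID 5 yields 2 × 80 = 160 GB and survives one failure; the extra 20 GB on each larger drive goes unused.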

Hardware RAID

Controller card-based hardware RAID


Intelligent, external RAID controller

Example: HP Surestore Virtual Array 7100

Key benefits and features

Complete reliability

· End-to-End Data Integrity provides full disk array data integrity

· AUTORAID 5 DP allows two disks to fail simultaneously without data loss

High availability

· Dual, hot-swappable redundant virtual array controllers ensure maximum uptime

· Native Fibre Channel host and drive interfaces

Economical solution

· Management costs are kept low by sharing the virtual array among heterogeneous operating systems and by remote management

Upgrade without interrupting the network

· Add extra storage capacity and functionality online, instantly

Self-managing

· The HP Surestore VA7100 intelligently spreads user data across available disks to increase performance and avoid 'hot spots'

Software RAID

Unix utilities

· Examples:

§ The MD driver in the Linux kernel supports RAID 0/1/4/5

· See The Software-RAID HOWTO

§ Solaris Solstice DiskSuite and Veritas Volume Manager offer RAID 0, 1 and 5.

· See the Solaris Volume Manager Administration Guide

· Advantages:

o No additional cost for a controller

o You don’t have to install, configure or manage a hardware RAID controller.

· Disadvantages:

o OS-dependent

o Occupies system memory and consumes CPU cycles

o Degrades overall server performance, especially with parity RAID levels

o Boot volume limitations

o Limited RAID level support

o Limited support for advanced features such as hot spares and drive swapping

o Operating system and software compatibility issues, and reliability concerns
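The cost of parity in software RAID is easiest to see on a small write. Updating one block of a RAID 5 stripe without reading the whole stripe takes a read-modify-write: read the old data and the old parity, compute new parity = old parity XOR old data XOR new data, then write the new data and the new parity. That is four disk I/Os plus XOR work for one logical write, and in software RAID the XOR runs on the host CPU. The sketch below is a minimal illustration under those assumptions; the function name and the per-level I/O table (which assumes a two-way mirror for RAID 1) are hypothetical.

```python
def small_write_parity(old_data: bytes, new_data: bytes, old_parity: bytes) -> bytes:
    """RAID 5 read-modify-write: new parity = old parity XOR old data XOR new data."""
    if not (len(old_data) == len(new_data) == len(old_parity)):
        raise ValueError("blocks must be the same length")
    return bytes(p ^ o ^ n for p, o, n in zip(old_parity, old_data, new_data))


# Disk I/Os needed for one small (single-block) write, assuming read-modify-write
# for the parity levels and a two-way mirror for RAID 1.
SMALL_WRITE_IOS = {
    "0": 1,  # write the data block
    "1": 2,  # write both mirror copies
    "5": 4,  # read data + parity, write data + parity
    "6": 6,  # read data + two parity blocks, write all three
}


if __name__ == "__main__":
    d0, d1, d2 = b"\x0f", b"\xf0", b"\x33"
    parity = bytes(a ^ b ^ c for a, b, c in zip(d0, d1, d2))
    new_d1 = b"\xaa"
    new_parity = small_write_parity(d1, new_d1, parity)
    # Same result as recomputing parity over the whole updated stripe.
    assert new_parity == bytes(a ^ b ^ c for a, b, c in zip(d0, new_d1, d2))
    for level, ios in SMALL_WRITE_IOS.items():
        print(f"RAID {level}: {ios} disk I/O(s) per small write")
```

The shortcut works because XORing the old data out of the parity and the new data in gives the same result as recomputing parity over the whole stripe, without reading the other data disks.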

Storage Area Network (SAN)

A storage area network (SAN) is a high-speed network of shared storage devices. A storage device is a machine that contains nothing but a disk or disks for storing data. A SAN's architecture makes all storage devices available to all servers on a LAN or WAN, and storage devices added to the SAN later are likewise accessible from any server in the larger network. In this arrangement, the server merely acts as a pathway between the end user and the stored data. Because stored data does not reside directly on any of the network's servers, server power is available for business applications and network capacity is freed for the end user.

SAN components

Storage devices

Switches

Host Bus Adaptors (HBAs)

Why a SAN?

· Unprecedented levels of flexibility in system management and configuration

· Servers can be added to and removed from a SAN while their data remains in the SAN.

· Multiple servers can access the same storage for more consistent and rapid processing.

· The storage can be easily increased, changed or re-assigned.

· Multiple compute servers and backup servers can access a common storage pool. SAN offers configurations that emphasize connectivity, performance, resilience to outage, or all three.

· SAN brings enterprise-level availability to open system servers.

· SANs improve staff efficiency by supporting a variety of operating systems, servers and operational needs.

· SANs support high-performance backup and rapid restores.

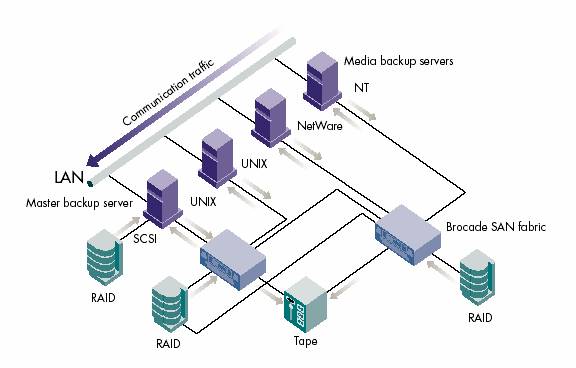

Example: A basic SAN infrastructure using LAN-free data backup to reduce network traffic

Example: McKesson Hadley group’s SAN

Components:

EMC Symmetrix storage array (close to 300 78 GB drives, 24 TB total)

Two EMC Connectrix SAN switches (32-port and 64-port)

EMC Celerra file server (dual Gigabit network connections, 3 data movers)

Usage:

Allocate space on the fly

Provide data storage for the NFS server

Replicate data

Problems

Did not work with Tru64 4.0g with more than 7 devices

Created fake device names and required multiple reboots on Tru64 4.0x

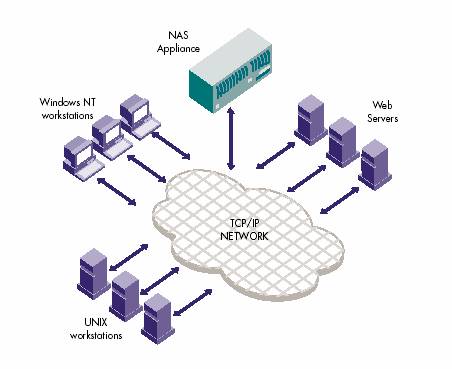

Network Attached Storage (NAS)

Is designed to provide data access at the file level.

Is based on TCP/IP

Runs a specialized OS

Example: McKesson Hadley group’s NAS

Component:

Network Appliance (two disk shelves, 150 GB total)

Usage:

Home directories

Source code repository

Snapshots

Problems:

Local tape backup took too long

Network bottleneck

Could not be expanded any further

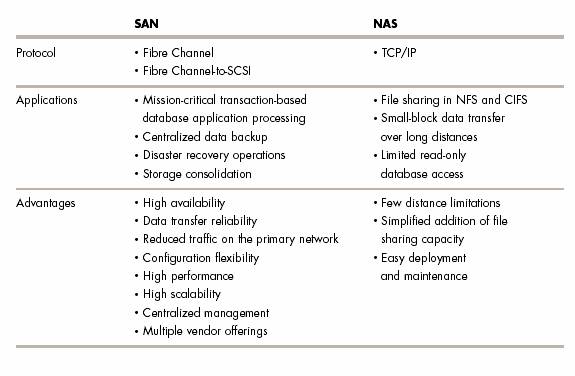

The comparison between SAN and NAS: